March 14th, 2016

On March 12th I held an introductory course on agile project management principles, tools and techniques at the Order of the Engineer in Bergamo. Here is the supporting deck I used during the seminar (slides in Italian only).

· Tags: agile, project management, slideshare

January 31st, 2016

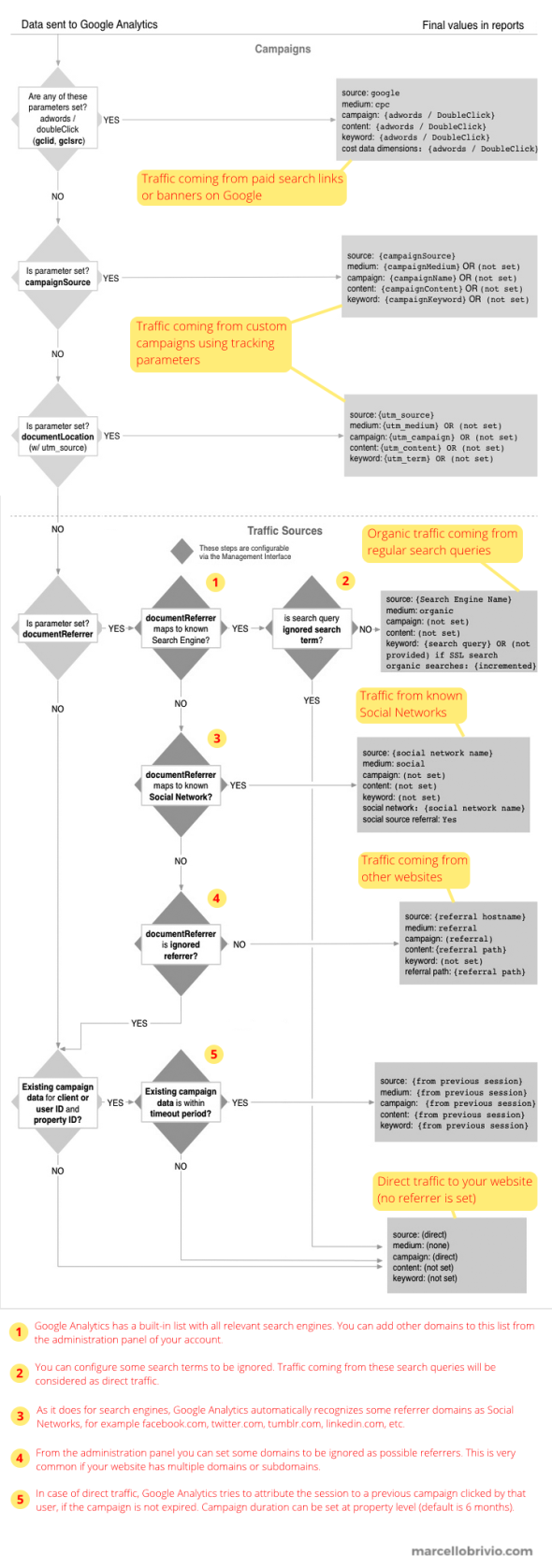

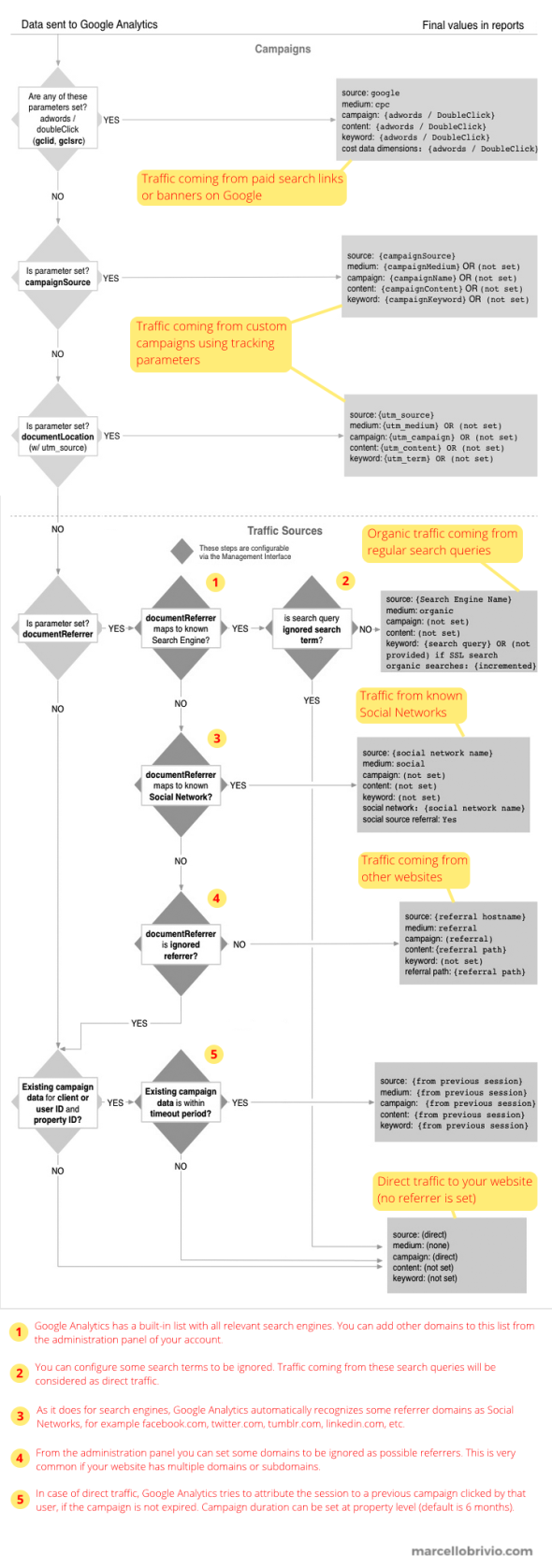

Google Analytics is probably the most popular and widespread web analytics tool on the market. One of its core features is giving clear and detailed insights into where your visitors are coming from. This is done in the “Acquisition” panel, which is one of the most important dashboards for any web marketer.

Basically, here you can discover how many of your visitors came to your website by typing your URL directly in their browser or by clicking on a bookmark (this is called “direct” traffic), how many found your pages through a search query on Google (the “organic” traffic), how many came from links posted on social networks, etc.

A more advanced use of the “Acquisition” dashboard requires customized links: adding a set of special “UTM” parameters to your URL allows you to create a specific tracking for any campaign, for example email newsletters, banners, blog posts and any other digital marketing initiative involving a link to your pages. If you pay attention while browsing the Web, you’ll probably notice a lot of URLs like the following example:

http://link.com/?utm_campaign=spring&utm_medium=email&utm_source=newslet2
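As a minimal sketch, a link like the one above could be built and read back in Python with the standard library only. The helper names `add_utm` and `read_utm` are my own, chosen for illustration; they are not part of Google Analytics.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url, campaign, medium, source):
    """Return `url` with the three UTM parameters appended to its query string."""
    parts = urlsplit(url)
    # Keep any query parameters the URL already has.
    query = parse_qsl(parts.query, keep_blank_values=True)
    query += [
        ("utm_campaign", campaign),
        ("utm_medium", medium),
        ("utm_source", source),
    ]
    return urlunsplit(parts._replace(query=urlencode(query)))


def read_utm(url):
    """Return only the utm_* parameters found in `url` as a dict."""
    pairs = parse_qsl(urlsplit(url).query)
    return {key: value for key, value in pairs if key.startswith("utm_")}


tagged = add_utm("http://link.com/", "spring", "email", "newslet2")
print(tagged)
print(read_utm(tagged))
```

Using `urlencode` rather than string concatenation means that values containing spaces or ampersands are escaped properly.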

So, Google Analytics has to manage a lot of possible traffic sources. The flow chart shown with this post, taken from its official documentation, explains how it recognizes the source of a visit and which source takes priority.

· Tags: digital marketing, google, web analytics

January 24th, 2016

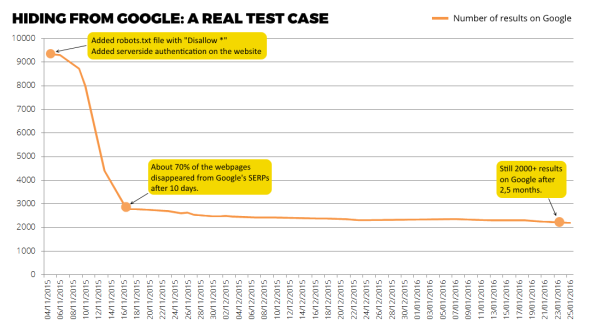

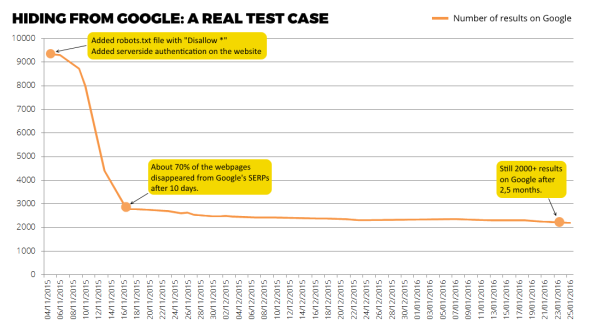

Lots of articles and books have been written on how to increase a website’s visibility on Google, but you won’t find nearly as many resources about the opposite task: how to remove your website from every search result page.

I recently had to do this job for a non-critical asset, and I had fun monitoring how long it takes for a website with about 10K indexed pages to be completely forgotten by the most popular search engine.

I started my little experiment with the following two actions:

- I modified the robots.txt file to block every user agent on every page of that website;

- I added server-side authentication to the website’s root folder, just to be sure that Google would not be able to access those URLs anymore.
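The exact file is not reproduced here, but the usual way to block every user agent on every page is a two-line robots.txt. As a minimal sketch, Python's standard `urllib.robotparser` can confirm that such rules deny a crawler; the `example.com` URLs below are placeholders, not the real website.

```python
from urllib.robotparser import RobotFileParser

# Assumed content: the conventional "block everything" rules,
# not necessarily the exact file used in the experiment.
ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# can_fetch() returns False when the rules forbid the given user agent.
for page in ("http://example.com/", "http://example.com/some/page.html"):
    print(page, parser.can_fetch("Googlebot", page))
```

This only checks what the rules say. Whether and when a search engine drops pages that are already indexed is a separate matter, which is exactly what the experiment measures.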

Then I just sat down and watched my website slowly disappear from Google’s SERPs. I am surprised by the results: after 10 days, about 70% of the original 10K pages had been removed, but the rest is taking much longer. Now, two and a half months after the beginning, I still have more than 2K indexed pages.

Note that you can speed up the process with further actions, in particular by resubmitting a cleaned XML sitemap or even by manually blocking URLs through Google Webmaster Tools. Anyway, it’s very interesting to see how Google handles this kind of situation.

· Tags: google, seo